Stimulus modality

Stimulus modality, also called sensory modality, is one aspect of a stimulus or what is perceived after a stimulus. For example, the temperature modality is registered after heat or cold stimulates a receptor. Sensory modalities include light, sound, temperature, taste, pressure, and smell. The type and location of the sensory receptor activated by the stimulus play the primary role in coding the sensation. The sensory modalities work together to heighten the sensation of a stimulus when necessary.[1]

Multimodal perception

Multimodal perception is the ability of the mammalian nervous system to combine the different inputs of the sensory nervous system to enhance the detection or identification of a particular stimulus. Sensory modalities are combined in cases where a single modality gives an ambiguous or incomplete result.[1]

Integration of sensory modalities occurs when multimodal neurons receive sensory information that overlaps across different modalities. Multimodal neurons are found in the superior colliculus;[1] they respond to a variety of sensory inputs. Multimodal neurons lead to changes in behavior and assist in analyzing behavioral responses to certain stimuli.[1] In each case, information from two or more senses is involved. Multimodal perception is not limited to one area of the brain: many brain regions are activated when sensory information is perceived from the environment.[2] In fact, the hypothesis of a centralized multisensory region is increasingly questioned, as several regions not previously investigated are now considered multimodal. The reasons behind this are being investigated by several research groups, but these issues are now generally approached from a decentralized theoretical perspective. Moreover, labs using invertebrate model organisms may provide valuable information, as these organisms are more easily studied and are considered to have decentralized nervous systems.

Lip reading

Lip reading is a multimodal process for humans.[2] By watching movements of the lips and face, humans become conditioned to and practiced at lip reading.[2] Silent lip reading activates the auditory cortex. When sounds are matched or mismatched with the movements of the lips, the temporal sulcus of the left hemisphere becomes more active.[2]

Integration effect

Multimodal perception comes into effect when a unimodal stimulus fails to produce a response. The integration effect occurs when the brain detects weak unimodal signals and combines them to create a multimodal perception for the mammal. Integration is possible when the different stimuli coincide, and it is depressed when the multisensory information is not presented coincidentally.[2]

Polymodality

Polymodality is the ability of a single receptor to respond to multiple modalities. Free nerve endings, for example, can respond to temperature, mechanical stimuli (touch, pressure, stretch) or pain (nociception).

Light modality

Description

The stimulus modality for vision is light; the human eye can access only a limited section of the electromagnetic spectrum, between 380 and 760 nanometres.[3] Specific inhibitory responses in the visual cortex help create a visual focus on a specific point rather than on the entire surroundings.[4]

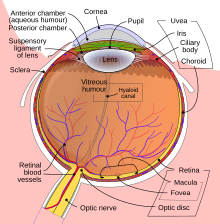

Perception

To perceive a light stimulus, the eye must first refract the light so that it falls directly on the retina. Refraction in the eye is carried out by the cornea and lens, while the iris regulates how much light enters. The transduction of light into neural activity occurs via the photoreceptor cells in the retina. In the absence of light, a molecule derived from vitamin A is attached to a protein, and the structure formed by the two molecules is a photopigment. When a particle of light hits a photopigment in a photoreceptor, the two molecules come apart and a chain of chemical reactions occurs. As a result, the photoreceptor sends a message to a neuron called the bipolar cell. The message is then passed to a ganglion cell and finally to the brain.[5]

Adaptation

The eye is able to detect a visual stimulus when photons (light packets) cause a photopigment molecule, primarily rhodopsin, to come apart. Rhodopsin, which is usually pink, becomes bleached in the process. At high levels of light, photopigments are broken apart faster than they can be regenerated. Because few photopigments are intact at any moment, the eyes are not very sensitive to light. When entering a dark room after being in a well-lit area, the eyes require time for a good quantity of rhodopsin to regenerate. As more time passes, there is a higher chance that a photon will split an unbleached photopigment, because the rate of regeneration will have surpassed the rate of bleaching. This is called adaptation.[5]
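The balance between bleaching and regeneration described above can be sketched as a simple rate model. This is only an illustration: the equation, the function name `unbleached_fraction`, and both rate constants are assumptions chosen for readability, not measured values from the cited source.

```python
def unbleached_fraction(intensity, seconds, start=1.0,
                        bleach_rate=0.05, regen_rate=0.01, dt=0.1):
    """Toy model of photopigment bleaching and regeneration.

    Integrates dp/dt = regen_rate * (1 - p) - bleach_rate * intensity * p
    with Euler steps, where p is the fraction of unbleached photopigment.
    All constants are hypothetical and carry arbitrary units.
    """
    p = start
    for _ in range(round(seconds / dt)):
        p += dt * (regen_rate * (1.0 - p) - bleach_rate * intensity * p)
    return p


# Ten minutes in bright light: bleaching outpaces regeneration.
bright = unbleached_fraction(intensity=10.0, seconds=600)

# Then step into a dark room: regeneration gradually restores sensitivity.
dark_early = unbleached_fraction(intensity=0.0, seconds=30, start=bright)
dark_late = unbleached_fraction(intensity=0.0, seconds=600, start=bright)

print(f"bright light: {bright:.3f}")
print(f"30 s in dark: {dark_early:.3f}")
print(f"10 min in dark: {dark_late:.3f}")
```

In this sketch the unbleached fraction stays low under bright light and climbs back toward 1 in darkness, which mirrors the delay people notice when entering a dark room.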

Colour stimuli

Humans are able to see an array of colours because light in the visible spectrum is made up of different wavelengths (from 380 to 760 nm). Our ability to see in colour is due to three types of cone cell in the retina, each containing a different photopigment. Each type of cone is specialized to best pick up a certain wavelength (420, 530 and 560 nm, or roughly the colours blue, green and red). The brain is able to distinguish the wavelength and colour in the field of vision by working out which cones have been stimulated. The physical dimensions of colour include wavelength, intensity and purity, while the related perceptual dimensions include hue, brightness and saturation.[5]

Among mammals, trichromatic colour vision of this kind is found mainly in primates.[5]

The trichromatic theory was proposed in 1802 by Thomas Young. According to Young, the human visual system is able to create any colour by collecting information from the three cones. The system puts the information together and composes a new colour based on the amount of each hue that has been detected.[5]
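As a rough illustration of the trichromatic idea, the sketch below models each cone type as a bell-shaped sensitivity curve centred on the peak wavelengths given in this article (420, 530 and 560 nm). The Gaussian shape, the shared 40 nm bandwidth, and the names `cone_responses` and `dominant_cone` are assumptions made for the example; real cone sensitivity curves are broader and asymmetric.

```python
import math

# Peak wavelengths from the text; the labels S, M and L are conventional
# short-, medium- and long-wavelength cone names.
CONE_PEAKS_NM = {"S": 420.0, "M": 530.0, "L": 560.0}
BANDWIDTH_NM = 40.0  # assumed width of each curve, for illustration only


def cone_responses(wavelength_nm):
    """Return the relative response (0 to 1) of each cone type."""
    return {
        name: math.exp(-((wavelength_nm - peak) ** 2) / (2 * BANDWIDTH_NM ** 2))
        for name, peak in CONE_PEAKS_NM.items()
    }


def dominant_cone(wavelength_nm):
    """Name of the cone type that responds most strongly."""
    responses = cone_responses(wavelength_nm)
    return max(responses, key=responses.get)


for wavelength in (450, 530, 600):
    print(wavelength, dominant_cone(wavelength), cone_responses(wavelength))
```

A single wavelength usually excites more than one cone type, so it is the pattern of the three responses, rather than any one of them, that the visual system would need to interpret.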

Subliminal visual stimuli

Some studies show that subliminal stimuli can affect attitude. In a 1992 study, Krosnick, Betz, Jussim and Lynn showed participants a series of slides in which different people were going through normal everyday activities (e.g. going to the car, sitting in a restaurant). These slides were preceded by slides that caused either positive emotional arousal (e.g. a bridal couple, a child with a Mickey Mouse doll) or negative emotional arousal (e.g. a bucket of snakes, a face on fire), shown for a period of 13 milliseconds, which participants consciously perceived as a sudden flash of light. None of the participants were told of the subliminal images. The experiment found that, during the questionnaire round, participants were more likely to assign positive personality traits to people in pictures preceded by the positive subliminal images and negative personality traits to people in pictures preceded by the negative subliminal images.[6]

Tests

Some common tests that measure visual health include visual acuity tests, refraction tests, visual field tests and colour vision tests. Visual acuity tests are the most common and measure the ability to bring details into focus at different distances. Usually this test is conducted by having participants read a chart of letters or symbols while one eye is covered. Refraction tests measure the eye's need for glasses or corrective lenses. This test can detect whether a person may be nearsighted or farsighted. These conditions occur when the light rays entering the eye are unable to converge on a single spot on the retina. Both refractive errors require corrective lenses to correct blurred vision. Visual field tests detect any gaps in peripheral vision. In healthy normal vision, an individual should be able to partially perceive objects to the left or right of their field of view using both eyes at one time. The center of the field of vision is seen in most detail. Colour vision tests measure one's ability to distinguish colours and are used to diagnose colour blindness. They are also an important step in some job screening processes where the ability to see colour may be crucial, such as military work or law enforcement.[7]

Sound modality

Description

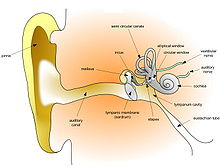

The stimulus modality for hearing is sound. Sound is created through changes in the pressure of the air. As an object vibrates, it compresses the surrounding air molecules as it moves towards a given point and lets them spread apart as it moves away from that point. Periodicity in sound waves is measured in hertz. Humans, on average, are able to detect sounds as pitched when they contain periodic or quasi-periodic variations in the range of 30 to 20,000 hertz.[5]

Perception

When there are vibrations in the air, the eardrum is stimulated. The eardrum collects these vibrations and sends them on towards the receptor cells. The ossicles, which are connected to the eardrum, pass the vibrations to the fluid-filled cochlea. Once the vibrations reach the cochlea, the stirrup (part of the ossicles) puts pressure on the oval window. This opening allows the vibrations to move through the liquid in the cochlea, where the receptive organ is able to sense them.[5]

Pitch, loudness and timbre

There are many different qualities in sound stimuli including loudness, pitch and timbre.[5]

The human ear is able to detect differences in pitch through the movement of auditory hair cells found on the basilar membrane. High frequency sounds will stimulate the auditory hair cells at the base of the basilar membrane while medium frequency sounds cause vibrations of auditory hair cells located at the middle of the basilar membrane. For frequencies that are lower than 200 Hz, the tip of the basilar membrane vibrates in sync with the sound waves. In turn, neurons are fired at the same rate as the vibrations. The brain is able to measure the vibrations and is then aware of any low frequency pitches.[5]

When a louder sound is heard, more hair cells are stimulated and the rate of firing of axons in the cochlear nerve increases. However, because the rate of firing also defines low pitch, the brain has an alternate way of encoding the loudness of low-frequency sounds. The number of hair cells that are stimulated is thought to communicate loudness at low frequencies.[5]

Aside from pitch and loudness, another quality that distinguishes sound stimuli is timbre. Timbre allows us to hear the difference between two instruments that are playing at the same frequency and loudness, for example. When two simple tones are put together they create a complex tone. The simple tones of an instrument are called harmonics or overtones. Timbre is created by putting the harmonics together with the fundamental frequency (a sound's basic pitch). When a complex sound is heard, it causes different parts in the basilar membrane to become simultaneously stimulated and flex. In this way, different timbres can be distinguished.[5]
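To make the idea of a complex tone concrete, the following sketch adds harmonics to a fundamental frequency and samples the resulting waveform. The amplitude lists `flute_like` and `reed_like` are invented for illustration and are not measurements of real instruments, and the example does not attempt to match the loudness of the two tones.

```python
import math


def complex_tone(fundamental_hz, harmonic_amplitudes, t):
    """Value at time t (seconds) of a fundamental plus its overtones.

    harmonic_amplitudes[0] scales the fundamental, harmonic_amplitudes[1]
    the component at twice the fundamental frequency, and so on.
    """
    return sum(
        amp * math.sin(2 * math.pi * fundamental_hz * (k + 1) * t)
        for k, amp in enumerate(harmonic_amplitudes)
    )


def sample(fundamental_hz, harmonic_amplitudes, n=8, rate_hz=8000):
    """Return the first n samples of the tone at the given sample rate."""
    return [
        complex_tone(fundamental_hz, harmonic_amplitudes, i / rate_hz)
        for i in range(n)
    ]


# Two hypothetical timbres sharing the same 440 Hz fundamental.
flute_like = [1.0, 0.1, 0.05]
reed_like = [1.0, 0.6, 0.4]

a = sample(440.0, flute_like)
b = sample(440.0, reed_like)
print([round(x, 3) for x in a])
print([round(x, 3) for x in b])
```

Both waveforms repeat 440 times per second, so they share a pitch, but their shapes differ because the overtones are mixed in different proportions.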

Sound stimuli and human fetuses

A number of studies have shown that a human fetus will respond to sound stimuli coming from the outside world.[8][9] In a series of 214 tests conducted on 7 pregnant women, a reliable increase in fetal movement was detected in the minute directly following the application of a sound stimulus with a frequency of 120 cycles per second to the abdomen of the mother.[8]

Tests

Hearing tests are administered to ensure optimal function of the ear and to observe whether sound stimuli are passing the eardrum and reaching the brain as they should. The most common hearing tests require a spoken response to words or tones. Hearing tests include the whispered speech test, pure tone audiometry, the tuning fork test, speech reception and word recognition tests, the otoacoustic emissions (OAE) test and the auditory brainstem response (ABR) test.[10]

During a whispered speech test, the participant is asked to cover the opening of one ear with a finger. The tester will then step back 1 to 2 feet behind the participant and say a series of words in a soft whisper. The participant is then asked to repeat what is heard. If the participant is unable to distinguish the word, the tester will speak progressively louder until the participant is able to understand what is being said. The other ear is then tested.[10]

In pure tone audiometry, an audiometer is used to play a series of tones through headphones. The participant listens to the tones, which vary in pitch and loudness. The tester adjusts the volume, and the participant is asked to signal when he or she can no longer hear the tone being played. The testing is completed after listening to a range of pitches. Each ear is tested individually.[10]

During the tuning fork test, the tester will have the tuning fork vibrate so that it makes a sound. The tuning fork is placed in a specific place around the participant and hearing is observed. In some instances, individuals will show trouble hearing in places such as behind the ear.[10]

Speech recognition and word recognition tests measure how well an individual can hear normal day-to-day conversation. The participant is told to repeat conversation spoken at different volumes. The spondee threshold test is a related test that finds the loudness at which the participant is able to repeat half of a list of two-syllable words, or spondees.[10]

Otoacoustic emissions (OAE) and auditory brainstem response (ABR) tests measure responses to sounds. The OAE test measures the hearing of newborns by delivering a sound into the baby's ear through a probe. A microphone placed in the baby's ear canal picks up the inner ear's response to the sound stimulation and allows for observation. The ABR test, also known as the brainstem auditory evoked response (BAER) test or auditory brainstem evoked potential (ABEP) test, measures the brain's response to clicking sounds sent through headphones. Electrodes on the scalp and earlobes record a graph of the response.[10]

Taste modality

Description

Taste modality in mammals

In mammals, taste stimuli are encountered by axonless receptor cells located in taste buds on the tongue and pharynx. These receptor cells connect to different neurons and convey the message of a particular taste to a single medullary nucleus. A pheromone detection system also deals with taste stimuli. It is distinct from the normal taste system and is organized like the olfactory system.[11]

Taste modality in flies and mammals

In both insect and mammalian taste, receptor cells sort stimuli into attractive or aversive. The number of taste receptors on a mammalian tongue and on the tongue of the fly (the labellum) is about the same. Most of the receptors are dedicated to detecting repulsive ligands.[11]

Perception

Perception of taste is generated by the following sensory afferents: gustatory, olfactory, and somatosensory fibers. Taste perception is created by combining multiple sensory inputs. Different modalities help determine the perception of taste, especially when attention is drawn to particular sensory characteristics that are different from taste.[1]

Integration of taste and smell modality

The impression of both taste and smell arises in heteromodal regions of the limbic and paralimbic brain. Taste–odor integration occurs at earlier stages of processing. Through life experience, factors such as the physiological significance of a given stimulus are perceived. Learning and affective processing are the primary functions of the limbic and paralimbic brain. Taste perception is a combination of oral somatosensation and retronasal olfaction.[1]

Pleasure of food

The sensation of taste comes from oral somatosensory stimulation together with retronasal olfaction. The perceived pleasure encountered when eating and drinking is influenced by:

- sensory features, such as taste quality

- experience, such as prior exposure to taste-odor mixtures

- internal state

- cognitive context, such as information about brand[12]

Temperature modality

Description

The temperature modality excites or elicits a response through cold or hot temperature.[13] Different mammalian species have different temperature modalities.[14]

Perception

The cutaneous somatosensory system detects changes in temperature. Perception begins when thermal stimuli that deviate from a homeostatic set-point excite temperature-specific sensory nerves in the skin. Depending on their sensing range, specific thermosensory fibers respond to warmth or to cold. Specific cutaneous cold and warm receptor units also exhibit a discharge at constant skin temperature.[15]

Nerve fibers for temperature

Warm-sensitive and cold-sensitive nerve fibers differ in structure and function. Both lie underneath the skin surface. The terminals of each temperature-sensitive fiber do not branch away to different organs in the body. Instead, they form a small sensitive point that is distinct from those of neighboring fibers. The area of skin served by the single receptor ending of a temperature-sensitive nerve fiber is small. There are 20 cold points per square centimeter in the lips, 4 in the finger, and fewer than 1 cold point per square centimeter in trunk areas. There are 5 times as many cold-sensitive points as warm-sensitive points.[15]

Pressure modality

Description

The sense of touch, or tactile perception, is what allows organisms to feel the world around them. The environment acts as an external stimulus, and tactile perception is the act of passively exploring the world to simply sense it. To make sense of the stimuli, an organism will undergo active exploration, or haptic perception, by moving its hands or other areas in contact with the environment.[16] This gives a sense of what is being perceived, along with information about size, shape, weight, temperature, and material. Tactile stimulation can be direct, in the form of bodily contact, or indirect, through the use of a tool or probe. Direct and indirect touch send different types of messages to the brain, but both provide information regarding roughness, hardness, stickiness, and warmth. The use of a probe elicits a response based on the vibrations in the instrument rather than on direct environmental information.[17] Tactual perception gives information regarding cutaneous stimuli (pressure, vibration, and temperature), kinaesthetic stimuli (limb movement), and proprioceptive stimuli (position of the body).[18]

There are varying degrees of tactual sensitivity and thresholds, both between individuals and between different periods in an individual's life.[19] It has been observed that individuals have differing levels of tactile sensitivity in each hand. This may be due to callouses forming on the skin of the most used hand, creating a buffer between the stimulus and the receptor. Alternatively, the difference in sensitivity may be due to a difference in the cerebral functions or ability of the left and right hemispheres.[20] Tests have also shown that deaf children have a greater degree of tactile sensitivity than children with normal hearing ability, and that girls generally have a greater degree of sensitivity than boys.[21]

Tactile information is often used as an additional stimulus to resolve a sensory ambiguity. For example, a surface can be seen as rough, but this inference can only be confirmed by touching the material. When the sensory information from each modality involved corresponds, the ambiguity is resolved.[22]

Somatosensory information

Touch messages, in comparison to other sensory stimuli, have a large distance to travel to get to the brain. Tactile perception is achieved through the response of mechanoreceptors (cutaneous receptors) in the skin that detect physical stimuli. The response from a mechanoreceptor detecting pressure can be experienced as touch, discomfort, or pain.[23] Mechanoreceptors are situated in highly vascularized skin, and appear in both glabrous and hairy skin. Each mechanoreceptor is tuned to a different sensitivity, and will fire an action potential only when there is enough energy.[24] The axons of these single tactile receptors converge into a single nerve trunk, and the signal is then sent to the spinal cord, from where the message makes its way to the somatosensory system in the brain.

Mechanoreceptors

There are four types of mechanoreceptors: Meissner corpuscles and Merkel cell neurite complexes, located between the epidermis and dermis, and Pacinian corpuscles and Ruffini endings, located deep within the dermis and subcutaneous tissue. Mechanoreceptors are classified in terms of their adaptation rate and the size of their receptive field. Other receptors in the skin and body, and their functions, include:[25]

- Thermoreceptors detect changes in skin temperature.

- Kinesthetic receptors detect movements of the body and the position of the limbs.

- Nociceptors have bare nerve endings that detect tissue damage and give the sensation of pain.

Tests

A common test of a person's sensitivity to tactile stimuli is the measurement of their two-point touch threshold. This is the smallest separation of two points at which two distinct points of contact can be sensed rather than one. Different parts of the body have different degrees of tactile acuity, with extremities such as the fingers, face, and toes being the most sensitive. When two distinct points are perceived, the brain is receiving two different signals. The differences in acuity between parts of the body are the result of differences in the concentration of receptors.[25]
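One way to read a threshold off a set of two-point trials is sketched below. The scoring rule (the smallest separation at and above which every trial was reported as two points), the function name `two_point_threshold`, and the trial data are all assumptions for illustration; clinical protocols may score the test differently.

```python
def two_point_threshold(trials):
    """Estimate the two-point threshold from (separation_mm, felt_two) pairs.

    Walks from the widest separation downward and stops at the first trial
    reported as a single point. Returns None if even the widest separation
    was felt as one point.
    """
    threshold = None
    for separation_mm, felt_two in sorted(trials, reverse=True):
        if not felt_two:
            break
        threshold = separation_mm
    return threshold


# Hypothetical responses for two body sites (separations in millimetres).
fingertip = [(1, False), (2, False), (3, True), (5, True), (10, True)]
back = [(10, False), (20, False), (40, True), (60, True)]

print("fingertip:", two_point_threshold(fingertip), "mm")
print("back:", two_point_threshold(back), "mm")
```

With these made-up responses the fingertip resolves much closer points than the back, in line with the idea that acuity follows receptor concentration.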

Use in clinical psychology

Tactile stimulation is used in clinical psychology through the method of prompting. Prompting is the use of a set of instructions designed to guide a participant through learning a behavior. A physical prompt involves stimulation in the form of physically guided behavior in the appropriate situation and environment. The physical stimulus perceived through prompting is similar to the physical stimulus that would be experienced in a real-world situation, and makes the target behavior more likely in a real situation.[26]

Smell modality

Sensation

The sense of smell is called olfaction. All materials constantly shed molecules, which float into the nose or are sucked in through breathing. Inside the nasal chambers is the neuroepithelium, a lining deep within the nostrils that contains the receptors responsible for detecting molecules that are small enough to smell. These receptor neurons then synapse at the olfactory cranial nerve (CN I), which sends the information to the olfactory bulbs in the brain for initial processing. The signal is then sent to the remaining olfactory cortex for more complex processing.[27]

Odors

An olfactory sensation is called an odor. For a molecule to trigger olfactory receptor neurons, it must have specific properties. The molecule must be:

- volatile (able to float through the air)

- small (less than 5.8 × 10⁻²² grams)

- hydrophobic (water-repelling)

However, humans do not detect or process the smell of various common molecules such as nitrogen or water vapor.
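The mass limit of 5.8 × 10⁻²² grams is easier to compare with ordinary molecular masses once it is converted to daltons. The sketch below does that conversion; the dalton-to-gram constant is the standard value, while the function names and the example masses are chosen here for illustration.

```python
DALTON_IN_GRAMS = 1.66053906660e-24  # mass of one dalton (atomic mass unit)
MAX_ODORANT_MASS_G = 5.8e-22         # size limit quoted in the list above


def grams_to_daltons(mass_g):
    """Convert the mass of a single molecule from grams to daltons."""
    return mass_g / DALTON_IN_GRAMS


def is_small_enough(mass_da):
    """Check only the size criterion, given a molecular mass in daltons."""
    return mass_da * DALTON_IN_GRAMS < MAX_ODORANT_MASS_G


limit_da = grams_to_daltons(MAX_ODORANT_MASS_G)
print(f"size limit is roughly {limit_da:.0f} Da")

# Water (about 18 Da) and molecular nitrogen (about 28 Da) pass the size
# check, which shows that size alone does not make a molecule smellable.
for name, mass_da in [("water", 18.0), ("nitrogen", 28.0), ("1000 Da molecule", 1000.0)]:
    print(name, is_small_enough(mass_da))
```

The limit works out to a little under 350 daltons, so the criterion excludes large molecules but says nothing about whether a small molecule is actually detected.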

Olfactory ability can vary under different conditions. For example, olfactory detection thresholds vary with the length of a molecule's carbon chain. A molecule with a longer carbon chain is easier to detect and has a lower detection threshold. Additionally, women generally have lower olfactory thresholds than men, and this effect is magnified during a woman's ovulatory period.[25] People can sometimes experience a hallucination of smell, as in the case of phantosmia.

Interaction with other modalities

Olfaction interacts with other sensory modalities in significant ways. The strongest interaction is that of olfaction with taste. Studies have shown that an odor coupled with a taste increases the perceived intensity of the taste, and that the absence of a corresponding smell decreases it. The olfactory stimulation can occur before or during the episode of taste stimulation. The dual perception of the stimulus produces an interaction that facilitates association of the experience through an additive neural response and memorization of the stimulus. This association can also be made between olfactory and tactile stimuli during the act of swallowing. In each case, temporal synchrony is important.[28]

Tests

A common psychophysical test of olfactory ability is the triangle test. In this test, the participant is given three odors to smell. Of these three odors, two are the same and one is different, and the participant must choose which odor is the unique one. To test the sensitivity of olfaction, the staircase method is often used. In this method, the odor's concentration is increased until the participant is able to sense it, and subsequently decreased until the participant reports no sensation.[25]
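The staircase procedure can be sketched as a short simulation. The version below is a minimal one under stated assumptions: a fixed step size, a simulated participant who always detects concentrations at or above a hypothetical true threshold of 5.0 (arbitrary units), and a threshold estimate taken as the mean of the concentrations at which the direction reversed. Real protocols vary in step rules and scoring.

```python
def staircase_threshold(detects, start, step, max_trials=100, reversals_needed=6):
    """Run a simple up-down staircase and return the mean reversal level.

    detects: function taking a concentration and returning True if sensed.
    The concentration rises after each miss and falls after each detection.
    Returns None if the direction never reverses within max_trials.
    """
    concentration = start
    direction = +1  # begin by increasing the concentration
    reversals = []
    for _ in range(max_trials):
        sensed = detects(concentration)
        new_direction = -1 if sensed else +1
        if new_direction != direction:
            reversals.append(concentration)
            direction = new_direction
            if len(reversals) == reversals_needed:
                break
        concentration = max(0.0, concentration + direction * step)
    return sum(reversals) / len(reversals) if reversals else None


# Hypothetical participant with a sharp threshold at 5.0 units.
TRUE_THRESHOLD = 5.0
estimate = staircase_threshold(lambda c: c >= TRUE_THRESHOLD, start=0.0, step=1.0)
print("estimated threshold:", estimate)
```

Because the simulated participant is perfectly consistent, the staircase simply oscillates between the last undetected and first detected levels, and the estimate lands between them; a real participant's responses would be noisier.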

See also

- Autonomous sensory meridian response

- Crossmodal Attention

- Synesthesia (Ideasthesia)

- Modality (semiotics)

- Pallesthesia

References

- ^ a b c d e f Small, Dana M.; Prescott, John (19 July 2005). "Odor/taste integration and the perception of flavor". Experimental Brain Research. 166 (3–4): 345–357. doi:10.1007/s00221-005-2376-9. PMID 16028032. S2CID 403254.

- ^ a b c d e Ivry, Richard (2009). Cognitive Neuroscience: The biology of the mind. New York: W.W. Norton and Company. p. 199. ISBN 978-0-393-92795-5.

- ^ Russell, J.P; Wolfe, S.L.; Hertz, P.E.; Starr, C.; Fenton, M. B.; Addy, H.; Denis, M.; Haffie, T.; Davey, K. (2010). Biology: Exploring the Diversity of Life, First Canadian Edition, Volume Three. Nelson Education. pp. 833–840. ISBN 978-0-17-650231-7.

- ^ Yarbrough, Cathy. "Brains response to visual stimuli helps us to focus on what we should see, rather than all there is to see". EurekAlert!. Retrieved 29 July 2012.

- ^ a b c d e f g h i j k Carlson, N. R.; et al. (2010). Psychology: The Science of Behaviour. Toronto, Ontario: Pearson Education Canada. ISBN 978-0-205-64524-4.

- ^ Krosnick, J. A.; Betz, A. L.; Jussim, L. J.; Lynn, A. R. (1992). "Subliminal Conditioning of Attitudes". Personality and Social Psychology Bulletin. 18 (2): 152–162. doi:10.1177/0146167292182006. S2CID 145504287.

- ^ Healthwise Staff. "Vision Tests". WebMD. Retrieved 29 July 2012.

- ^ a b Sontag, L. W. (1936). "Changes in the Rate of the Human Fetal Heart in Response to Vibratory Stimuli". Archives of Pediatrics & Adolescent Medicine. 51 (3): 583–589. doi:10.1001/archpedi.1936.01970150087006.

- ^ Forbes, H. S.; Forbes, H. B. (1927). "Fetal sense reaction: Hearing". Journal of Comparative Psychology. 7 (5): 353–355. doi:10.1037/h0071872.

- ^ a b c d e f Healthwise Staff. "Hearing Tests". WebMD. Retrieved 29 July 2012.

- ^ a b Stocker, Reinhard F (1 July 2004). "Taste Perception: Drosophila – A Model of Good Taste". Current Biology. 14 (14): R560–R561. doi:10.1016/j.cub.2004.07.011. PMID 15268874.

- ^ SMALL, D. M.; BENDER, G.; VELDHUIZEN, M. G.; RUDENGA, K.; NACHTIGAL, D.; FELSTED, J. (10 September 2007). "The Role of the Human Orbitofrontal Cortex in Taste and Flavor Processing". Annals of the New York Academy of Sciences. 1121 (1): 136–151. Bibcode:2007NYASA1121..136S. doi:10.1196/annals.1401.002. PMID 17846155. S2CID 7934796.

- ^ "Temperature modality".

- ^ Bodenheimer, F. S (1941). "Observations on Rodents in Herter's Temperature Gradient". Physiological Zoology. 14 (2): 186–192. doi:10.1086/physzool.14.2.30161738. JSTOR 30161738. S2CID 87698999.

- ^ a b McGlone, Francis; Reilly, David (2010). "The cutaneous sensory system". Neuroscience & Biobehavioral Reviews. 34 (2): 148–159. doi:10.1016/j.neubiorev.2009.08.004. PMID 19712693. S2CID 9472588.

- ^ Reuter E.; Voelcker-Rehage C.; Vieluf S.; Godde B. (2012). "Touch perception throughout working life: Effects of age and expertise". Experimental Brain Research. 216 (2): 287–297. doi:10.1007/s00221-011-2931-5. PMID 22080104. S2CID 16712201.

- ^ Yoshioka T.; Bensmaïa S.; Craig J.; Hsiao S. (2007). "Texture perception through direct and indirect touch: An analysis of perceptual space for tactile textures in two modes of exploration". Somatosensory & Motor Research. 24 (1–2): 53–70. doi:10.1080/08990220701318163. PMC 2635116. PMID 17558923.

- ^ Bergmann Tiest W (2010). "Tactual perception of material properties". Vision Research. 50 (24): 2775–2782. doi:10.1016/j.visres.2010.10.005. PMID 20937297.

- ^ Angier R (1912). "Tactual and kinæsthetic space". Psychological Bulletin. 9 (7): 255–257. doi:10.1037/h0073444.

- ^ Weinstein S.; Sersen E. (1961). "Tactual sensitivity as a function of handedness and laterality". Journal of Comparative and Physiological Psychology. 54 (6): 665–669. doi:10.1037/h0044145. PMID 14005772.

- ^ Chakravarty A (1968). "Influence of tactual sensitivity on tactual localization, particularly of deaf children". Journal of General Psychology. 78 (2): 219–221. doi:10.1080/00221309.1968.9710435. PMID 5656904.

- ^ Lovelace, Christopher Terry (October 2000). Feature binding across sense modalities: Visual and tactual interactions (Thesis). ProQuest 619577012.

- ^ Xiong, Shuping; Goonetilleke, Ravindra S.; Jiang, Zuhua (March 2011). "Pressure thresholds of the human foot: measurement reliability and effects of stimulus characteristics". Ergonomics. 54 (3): 282–293. doi:10.1080/00140139.2011.552736. PMID 21390958. S2CID 22152573.

- ^ Pawson, Lorraine; Checkosky, Christine M.; Pack, Adam K.; Bolanowski, Stanley J. (January 2008). "Mesenteric and tactile Pacinian corpuscles are anatomically and physiologically comparable". Somatosensory & Motor Research. 25 (3): 194–206. doi:10.1080/08990220802377571. PMID 18821284. S2CID 33152961.

- ^ a b c d Wolfe, J., Kluender, K., & Levi, D. (2009). Sensation and perception. (2 ed.). Sunderland: Sinauer Associates.[page needed]

- ^ Miltenberger, R. (2012). Behavior modification: principles and procedures. (5 ed.). Belmont, CA: Wadsworth.[page needed]

- ^ Doty R (2001). "Olfaction". Annual Review of Psychology. 52 (1): 423–452. doi:10.1146/annurev.psych.52.1.423. PMID 11148312.

- ^ Labbe D.; Gilbert F.; Martin N. (2008). "Impact of olfaction on taste, trigeminal, and texture perceptions". Chemosensory Perception. 1 (4): 217–226. doi:10.1007/s12078-008-9029-x. S2CID 144260061.